Have you looked at NVIDIA’s journey lately?

It is the classic story of “overnight success” that actually took 30 years. They went from selling graphics cards to gamers to becoming the most valuable company on earth.

How? They didn’t just jump on a hype train. Years ago, they realized that the math behind video game graphics (massive amounts of matrix multiplication, run in parallel) was the same math deep learning needed. They understood the foundation.

But look at what is happening today.

We are seeing the exact opposite.

- Students are skipping Linear Algebra classes to learn “LangChain.”

- Job seekers are ignoring data structures to get an “OpenAI Certification.”

- Corporations are firing employees to buy “AI Solutions” that are just shiny wrappers around a chatbot.

They are trying to build the roof of the house before they have poured the concrete slab.

LinkedIn job postings for “AI Engineering” have spiked, yet a reported 80% of AI projects fail to deliver business value.

Why the Disconnect? The “FOMO” Effect

Because too many people are skipping the hard stuff. They aren’t learning the math, the data science, or the logic behind the models. They are just making API calls to ChatGPT and calling it a day.

This creates a massive “FOMO” (Fear Of Missing Out) effect. Everyone rushes to build the same thing, using the same tools, with the same surface-level knowledge.

If you want a career that lasts longer than the current hype cycle, you need to stop acting like a user and start thinking like an engineer.

The “API Trap”: Why Generic Wrappers Fail

Let’s be honest: Modern AI APIs are seductive. They make complex tasks feel impossibly easy.

Ten years ago: If you wanted to build an app that recognized a Shiba Inu dog, you needed a PhD. You had to collect thousands of images, manually label them, design a Convolutional Neural Network (CNN), and spend weeks training it.

Today: You can create a “Medical Assistant” or a “Legal Bot” in minutes. You just write a few lines of instructions in a system prompt, connect it to GPT-4, and suddenly you have a “product.”

It feels like magic. It feels like engineering. But this is where the trap snaps shut.

The Problem with Generalist Models

The problem isn’t that APIs are bad. The problem is that APIs are Generalists.

Imagine you are building an AI for a specific Law Firm. You use the standard API.

- The Demo: It works great! It summarizes emails perfectly. The client is happy.

- The Real World: The client asks, “Make it cite only New York State case law from 1990-2000, and interpret silence as consent based on this specific legal precedent.”

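- The Failure: The API can’t reliably honor those constraints. It pulls in cases from the wrong jurisdiction or the wrong years, or confidently cites cases that don’t exist.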

Why? Because the API was trained to be a “Generic Assistant” for everyone. It wasn’t trained for your specific, messy, complex problem.

Since you only know how to call the API, you are stuck. You cannot reach inside the model to retune the weights. You cannot adjust the training data. You cannot customize the architecture.

You delivered a generic tool to a client who needed a specific solution. That is why the project dies.

The Missing “Data Layer”: Where Intuition is Born

If APIs are the “fast food” of AI, platforms like Kaggle, GitHub, and LeetCode are the gym.

For a decade, these were the proving grounds.

- Kaggle taught us how to handle data.

- GitHub taught us how to structure code.

- LeetCode taught us logic.

These platforms taught us a painful truth that API documentation hides: Real-world data is garbage.

It is full of missing values, outliers, biases, and noise.

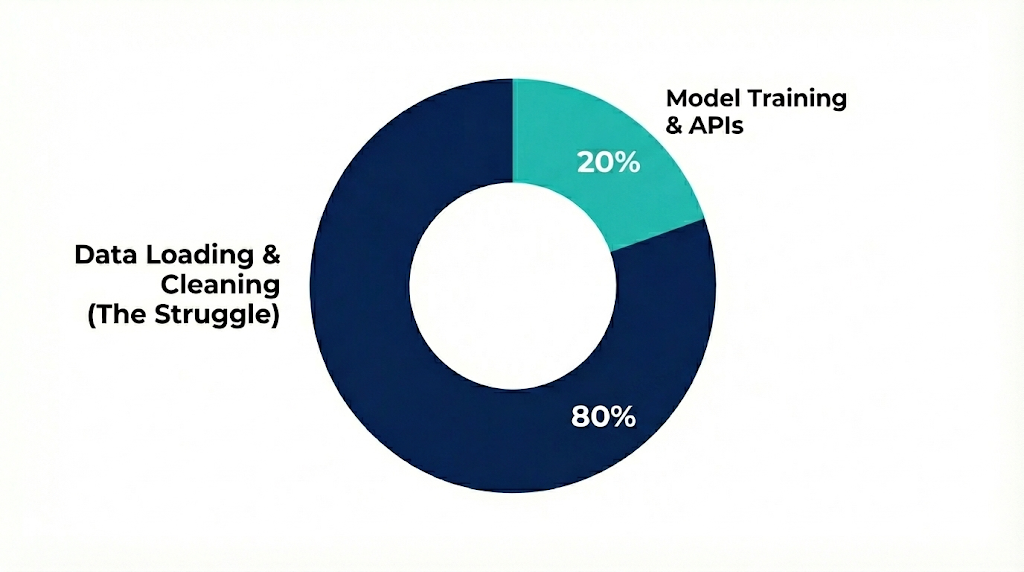

- The Old Way: You spent 80% of your time just cleaning the data. You fought for every 1% increase in accuracy. You learned why a model failed.

- The New Way: You paste a prompt. You get an output. You have no idea what happened in between.
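To make “cleaning” concrete, here is a minimal sketch of two chores from the Old Way, written in plain Python. It fills missing values with the median and clips outliers to the common 1.5 × IQR (Tukey) fences. The function name `clean_column` and the sample ages are made up for illustration. Clipping is only one choice: depending on the domain, you might drop or correct the outlier instead.

```python
import statistics

def clean_column(values, iqr_factor=1.5):
    """Fill missing entries (None) with the median, then clip outliers to the IQR fences."""
    present = [v for v in values if v is not None]
    median = statistics.median(present)
    # quantiles(..., n=4) returns the three cut points [Q1, Q2, Q3]
    q1, _, q3 = statistics.quantiles(present, n=4)
    iqr = q3 - q1
    low, high = q1 - iqr_factor * iqr, q3 + iqr_factor * iqr
    cleaned = []
    for v in values:
        if v is None:
            v = median  # fill the gap with a robust central value
        cleaned.append(min(max(v, low), high))  # pull extreme values back to the fence
    return cleaned

# Hypothetical "Age" column: two gaps and one obvious data-entry error (410)
ages = [22, 38, None, 35, 28, None, 54, 2, 27, 410]
cleaned = clean_column(ages)
print(cleaned)
```

The median is used rather than the mean because a single bad value like 410 would drag the mean far from a typical age. This is exactly the kind of judgment call a prompt hides from you.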

A widely cited Anaconda survey found that data scientists spend roughly 45% of their time loading and cleaning data, and older, often-repeated estimates put the figure as high as 80%.

Why does this matter?

Because when you skip the “struggle,” you lose the intuition. You become dependent. If the API works, you look like a genius. If the API hallucinates, you are helpless. You cannot debug a black box if you never learned how to build the box yourself.

The Consequences: Technical Debt & Career Suicide

So, what happens when you build a career (or a company) on top of APIs you don’t understand? You create two massive risks.

1. The Corporate Risk: You Have No “Moat”

When you rely 100% on external APIs, you have no competitive advantage.

Ask yourself: “How hard would it be for a competitor to clone my business?”

If your entire “tech stack” is just a prompt inside OpenAI, a competitor can rebuild your entire startup in a weekend. You haven’t built technology; you’ve built a dependency.

Real AI engineering involves building a “Moat.” This means optimizing costs, reducing latency, and perhaps fine-tuning smaller, open-source models (like Llama or Mistral) on your own proprietary data. You can’t do that if you don’t understand the science.

2. The Personal Risk: The Death of the “Prompt Engineer”

Here is a harsh truth: Prompt Engineering is not a career.

Remember when everyone started putting “Prompt Engineer” in their LinkedIn bios? That trend is already dying. Why?

As models get smarter, they understand human intent better. They don’t need your “perfect prompt” anymore.

- The API User: Will be replaced by the AI itself.

- The ML Engineer: Is the one who builds and optimizes the AI.

If your only skill is asking a chatbot questions, you are in a race to the bottom.

The Path Back to Mastery: How to Stop “Using” and Start “Engineering”

It is not too late. The AI revolution is just getting started.

Think of the Dot-com Bubble in the late 90s.

Back then, any company with “.com” in its name could raise millions. But when the bubble burst, the companies that survived (like Amazon and Google) were the ones that had built real infrastructure and real value.

We are at that same tipping point with AI. The hype is settling. The builders will win.

Here is how you pivot from being an API Consumer to a true AI Engineer.

The High-Level Shift: Build the “Why,” not just the “How”

Stop asking “How do I use this tool?” and start asking “How does this tool work?” If you can’t explain backpropagation or gradient descent, you aren’t ready to deploy a model to production.
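Gradient descent is less mysterious than it sounds. Here is a minimal sketch that fits a line y ≈ w·x + b to five points by repeatedly stepping against the gradient of the mean squared error. The gradients are derived by hand. The learning rate and step count are arbitrary choices that happen to work for this toy data, not recommended defaults.

```python
def gradient_descent(xs, ys, lr=0.05, steps=2000):
    """Fit y ≈ w*x + b by minimizing mean squared error with plain gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Partial derivatives of MSE = (1/n) * sum((w*x + b - y)^2) w.r.t. w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        # Step against the gradient, scaled by the learning rate
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # generated from y = 2x + 1
w, b = gradient_descent(xs, ys)
print(f"w={w:.3f}, b={b:.3f}")  # prints w=2.000, b=1.000
```

Try raising `lr` to 0.2 and watch the updates overshoot and blow up. Feeling that failure once teaches more than reading about it.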

The Concrete Roadmap (Start Here):

1. Go Back to Math (Linear Algebra & Stats)

Why: Neural networks are just matrix multiplication and probability. You cannot debug a model if you don’t speak its language.

Resource: Khan Academy (Linear Algebra) or 3Blue1Brown (YouTube) for visual intuition.
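Here is what “matrix multiplication and probability” looks like as a tiny two-layer forward pass in plain Python. The slogan skips one caveat: a nonlinearity (ReLU here) sits between the matrix multiplications. Without it, stacked layers would collapse into a single linear map. The weights are arbitrary illustrative numbers, not a trained model.

```python
import math

def matvec(W, x):
    """Multiply matrix W (a list of rows) by vector x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in W]

def relu(v):
    """Elementwise nonlinearity: negative values become 0."""
    return [max(0.0, a) for a in v]

def softmax(z):
    """Turn raw scores into probabilities; subtract the max for numerical stability."""
    m = max(z)
    exps = [math.exp(a - m) for a in z]
    total = sum(exps)
    return [e / total for e in exps]

def forward(x, W1, b1, W2, b2):
    """Matrix multiply, nonlinearity, matrix multiply, then softmax into probabilities."""
    h = relu([a + b for a, b in zip(matvec(W1, x), b1)])
    logits = [a + b for a, b in zip(matvec(W2, h), b2)]
    return softmax(logits)

# Arbitrary illustrative weights (not trained): 3 inputs -> 2 hidden units -> 2 classes
W1 = [[0.2, -0.5, 1.0], [0.7, 0.1, -0.3]]
b1 = [0.0, 0.1]
W2 = [[1.0, -1.0], [-0.5, 0.8]]
b2 = [0.0, 0.0]
probs = forward([1.0, 2.0, 0.5], W1, b1, W2, b2)
print(probs)  # two probabilities that sum to 1
```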

2. Get Your Hands Dirty on Kaggle

Why: You need to feel the pain of bad data. Pick a competition that ended 3 years ago. Don’t look at the leaderboard. Just take the raw CSV and try to squeeze a signal out of it.

Resource: Kaggle’s “Titanic” or “House Prices” datasets—the “Hello World” of dirty data.
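As a first taste, here is a sketch of the kind of baseline you might start with. It reads the raw CSV, counts the gaps, and checks how survival varies by one column. The rows below are made up to mimic a few columns of Kaggle’s Titanic `train.csv` (`Survived`, `Pclass`, `Sex`, `Age`). They are not real passenger records. Point `csv.DictReader` at the real file to run it on actual data.

```python
import csv
import io
from collections import defaultdict

# Made-up rows shaped like a subset of Titanic train.csv; note the blank Age fields
RAW = """Survived,Pclass,Sex,Age
0,3,male,22
1,1,female,38
1,3,female,
0,3,male,
1,2,female,14
0,1,male,54
"""

def survival_rate_by(rows, column):
    """Baseline signal: fraction of survivors for each value of one column."""
    counts = defaultdict(lambda: [0, 0])  # value -> [survivors, total]
    for row in rows:
        stats = counts[row[column]]
        stats[0] += int(row["Survived"])
        stats[1] += 1
    return {value: s / t for value, (s, t) in counts.items()}

rows = list(csv.DictReader(io.StringIO(RAW)))
missing_age = sum(1 for r in rows if r["Age"] == "")
rates = survival_rate_by(rows, "Sex")
print(f"{missing_age} of {len(rows)} rows have no Age")
print(rates)
```

Note that `csv.DictReader` hands you every field as a string, so blank ages arrive as `""`, not as a number. Deciding what to do with them is where the real work starts.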

3. Build from Scratch (The Karpathy Method)

Why: The single best way to learn is to code a neural network in raw Python (no PyTorch, no TensorFlow). When you see the loss function decrease on a model you wrote, you unlock a new level of understanding.

Resource: Andrej Karpathy’s “Neural Networks: Zero to Hero” series on YouTube. It is the gold standard for understanding how GPT actually works under the hood.
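To give a flavor of the exercise, here is a stripped-down scalar autograd engine in the spirit of Karpathy’s micrograd. It is not his code: the `Value` class and its methods are written just for this sketch. Each `Value` records how it was computed, and `backward()` applies the chain rule in reverse topological order. A short loop then trains a single tanh neuron by gradient descent so you can watch the loss fall.

```python
import math
import random

class Value:
    """A scalar that remembers how it was computed, so gradients can flow backward."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = lambda: None  # leaf nodes have nothing to propagate

    def __add__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data + other.data, (self, other))
        def backward_fn():
            self.grad += out.grad   # d(a+b)/da = 1
            other.grad += out.grad  # d(a+b)/db = 1
        out._backward = backward_fn
        return out

    __radd__ = __add__

    def __mul__(self, other):
        other = other if isinstance(other, Value) else Value(other)
        out = Value(self.data * other.data, (self, other))
        def backward_fn():
            self.grad += other.data * out.grad  # d(a*b)/da = b
            other.grad += self.data * out.grad  # d(a*b)/db = a
        out._backward = backward_fn
        return out

    __rmul__ = __mul__

    def __sub__(self, other):
        return self + (-1.0) * other

    def tanh(self):
        t = math.tanh(self.data)
        out = Value(t, (self,))
        def backward_fn():
            self.grad += (1 - t * t) * out.grad  # d tanh(x)/dx = 1 - tanh(x)^2
        out._backward = backward_fn
        return out

    def backward(self):
        # Topologically sort the graph, then apply the chain rule from output to inputs
        order, seen = [], set()
        def visit(v):
            if id(v) not in seen:
                seen.add(id(v))
                for p in v._parents:
                    visit(p)
                order.append(v)
        visit(self)
        self.grad = 1.0
        for v in reversed(order):
            v._backward()

# Train one neuron, y = tanh(w*x + b), on a few toy points
random.seed(0)
w, b = Value(random.uniform(-1, 1)), Value(random.uniform(-1, 1))
xs = [-2.0, -1.0, 1.0, 2.0]
ys = [-0.9, -0.5, 0.5, 0.9]

losses = []
for step in range(100):
    loss = Value(0.0)
    for x, y in zip(xs, ys):
        err = (w * x + b).tanh() - y
        loss = loss + err * err       # sum of squared errors
    w.grad, b.grad = 0.0, 0.0         # reset gradients before each backward pass
    loss.backward()
    for p in (w, b):
        p.data -= 0.05 * p.grad       # gradient descent step
    losses.append(loss.data)
print(f"loss: {losses[0]:.4f} -> {losses[-1]:.4f}")
```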

4. Read the Sacred Texts

Why: Everything we use today comes from research papers.

Resource: Read “Attention Is All You Need” (Vaswani et al., 2017), the paper that introduced the Transformer. Don’t just skim it; struggle through it until you understand the diagrams.
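The core equation of the paper is scaled dot-product attention, Attention(Q, K, V) = softmax(QKᵀ / √d_k) V. Below is a minimal single-head sketch using plain Python lists. It leaves out learned projections, masking, multi-head splitting, and batching, all of which the full Transformer adds on top.

```python
import math

def softmax(row):
    """Convert a list of scores into weights that sum to 1."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V, one query row at a time."""
    d_k = len(K[0])
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)
        # Output is the attention-weighted average of the value vectors
        out.append([sum(wt * v[j] for wt, v in zip(weights, V)) for j in range(len(V[0]))])
    return out

Q = [[1.0, 0.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[10.0, 0.0], [0.0, 10.0]]
print(attention(Q, K, V))  # the query matches the first key, so the first value dominates
```

Working through a toy case like this by hand, then comparing it to Figure 2 of the paper, is a good way to make the diagrams click.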

Conclusion

The era of “easy AI” is a trap. It promises speed but delivers fragility.

If you want to build toys, keep calling APIs. But if you want to build the future, and a career that survives the next decade, it is time to close the documentation tab, open a blank Python file, and start learning the fundamentals.

Don’t just ride the wave. Be the ocean.

Struggling with a generic AI wrapper that isn’t delivering results? At Tensour, we specialize in fixing failed AI projects by applying rigorous data science and custom engineering. Book a Technical Audit today.